For decades, Moore’s Law has been a guiding principle in the semiconductor industry. Formulated in 1965 by Gordon Moore, who later co-founded Intel, it predicted that the number of transistors on a microchip would double at a regular interval (every year in the original 1965 projection, revised in 1975 to about every two years), leading to a corresponding growth in computing power. However, as we approach the physical limits of silicon-based technology, the industry is witnessing a major shift. Modern computer systems are beginning to defy Moore’s Law through innovative approaches and breakthroughs that go beyond traditional scaling. Let’s delve into how computers are breaking Moore’s Law and what this means for the future of computing.
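To make the doubling rule concrete, here is a minimal sketch of the arithmetic, assuming the commonly quoted two-year doubling period. The function name and the starting count of one million transistors are hypothetical, chosen only for illustration.

```python
def projected_transistors(initial_count, years, doubling_period_years=2.0):
    """Project a transistor count under a fixed doubling period (an idealized model)."""
    return initial_count * 2 ** (years / doubling_period_years)


# Hypothetical starting point: a chip with one million transistors.
start = 1_000_000
for years in (2, 10, 20):
    print(f"after {years:2d} years: {round(projected_transistors(start, years)):,}")
```

Under this idealized model, ten doublings over twenty years multiply the count by 1,024, which is why even a modest slowdown in the doubling period has a large cumulative effect.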

- The Physical Limits of Silicon

Silicon has been the backbone of semiconductor technology for over half a century. However, as transistors shrink to nanometer scales, several physical challenges arise:

Quantum Tunneling: At very small scales, electrons can tunnel through insulating barriers, causing leakage currents and power inefficiencies.

Heat Dissipation: Packing more transistors into the same area raises power density, and the resulting heat is difficult to dissipate effectively, leading to thermal management issues.

Manufacturing Precision: Achieving the precise control needed to produce sub-5nm transistors is increasingly complex and expensive.

These challenges have slowed the pace of transistor scaling, leading many to declare that Moore’s Law is nearing its end.
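The sensitivity of quantum tunneling to barrier thickness can be illustrated with a rough estimate. The sketch below uses the standard textbook approximation for a rectangular barrier, T ≈ exp(-2κd) with κ = sqrt(2m(V - E))/ħ. It assumes the free electron mass and a hypothetical 1 eV barrier, so the numbers are illustrative and do not describe any real transistor.

```python
import math

HBAR = 1.054571817e-34            # reduced Planck constant, J*s
ELECTRON_MASS = 9.1093837015e-31  # free electron mass, kg (assumption: no effective-mass correction)
EV = 1.602176634e-19              # joules per electronvolt


def tunneling_probability(barrier_height_ev, barrier_width_nm):
    """Approximate transmission through a rectangular barrier: T ~ exp(-2 * kappa * d).

    barrier_height_ev is the barrier height above the electron's energy (V - E).
    """
    kappa = math.sqrt(2 * ELECTRON_MASS * barrier_height_ev * EV) / HBAR
    return math.exp(-2 * kappa * barrier_width_nm * 1e-9)


# Hypothetical 1 eV barrier at several thicknesses.
for width_nm in (3.0, 2.0, 1.0):
    print(f"{width_nm} nm: {tunneling_probability(1.0, width_nm):.2e}")
```

Because the thickness sits in the exponent, each nanometer removed from the barrier raises the leakage probability by several orders of magnitude in this model.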

- Architectural Innovations

To overcome the limitations of transistor scaling, computer architects are exploring new ways to enhance performance:

Multi-Core Processors: Instead of increasing the speed of a single core, modern CPUs incorporate multiple cores that can process tasks in parallel. This approach effectively boosts performance without relying solely on transistor scaling.

Heterogeneous Computing: Combining different types of processors (e.g., CPUs, GPUs, and specialized accelerators) in a single system allows for optimized performance across diverse workloads. For instance, GPUs excel at parallel processing tasks like graphics rendering and AI training.

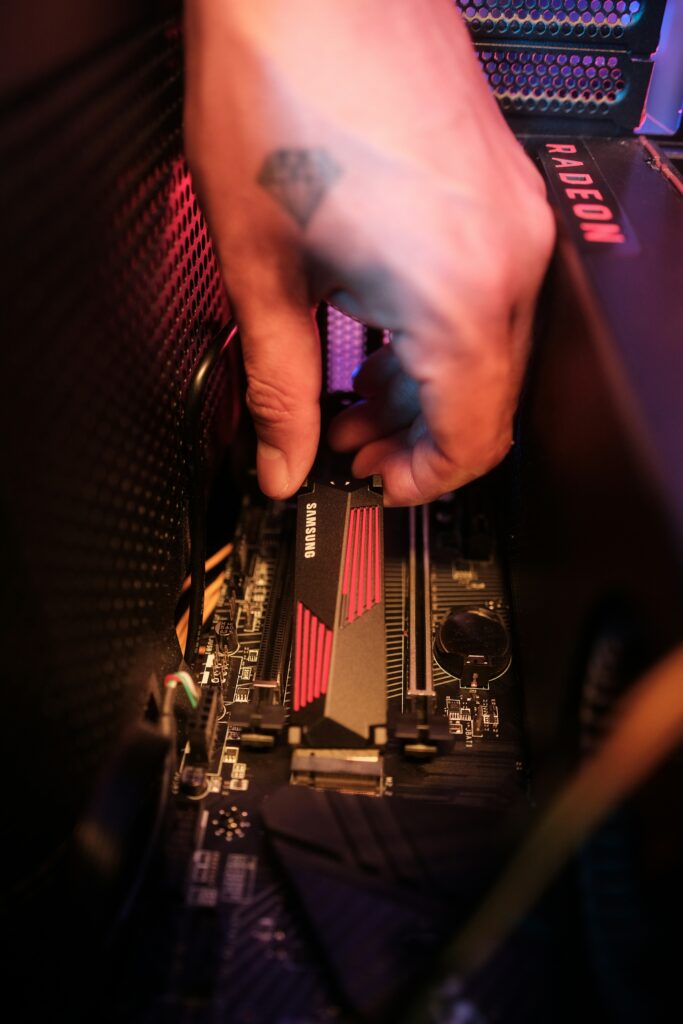

Chiplet Design: Breaking down a large chip into smaller, interconnected “chiplets” can improve yield and reduce costs. Companies like AMD have successfully implemented this approach in their Ryzen and EPYC processors.
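The yield benefit of chiplets can be sketched with a simple Poisson defect model, in which the fraction of defect-free dies is exp(-area × defect density). This is a simplified model chosen for illustration, and the die areas and defect density below are hypothetical. It also ignores the extra cost and yield loss of assembling chiplets into a package.

```python
import math


def poisson_yield(die_area_cm2, defects_per_cm2):
    """Fraction of dies expected to be defect-free under a Poisson defect model."""
    return math.exp(-die_area_cm2 * defects_per_cm2)


DEFECT_DENSITY = 0.1   # hypothetical defects per square centimeter
MONOLITHIC_AREA = 6.0  # hypothetical large die, in square centimeters
CHIPLET_COUNT = 4      # same total silicon split into four chiplets

monolithic = poisson_yield(MONOLITHIC_AREA, DEFECT_DENSITY)
chiplet = poisson_yield(MONOLITHIC_AREA / CHIPLET_COUNT, DEFECT_DENSITY)

print(f"monolithic die yield: {monolithic:.1%}")
print(f"per-chiplet yield:    {chiplet:.1%}")
```

A defect on a monolithic die scraps the whole die, while a defect on a chiplet scraps only that chiplet. If chiplets are tested before assembly, a larger share of the wafer ends up in working products.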

- Advancements in Materials Science

Researchers are investigating new materials to replace or complement silicon in semiconductors:

Gallium Nitride (GaN) and Silicon Carbide (SiC): These materials offer superior electrical properties and can operate at higher voltages and temperatures, making them well suited to power electronics and RF applications.

Graphene and Carbon Nanotubes: These carbon-based materials exhibit remarkable electrical conductivity and mechanical strength. They hold promise for next-generation transistors and interconnects.

Photonic Materials: Leveraging light instead of electrons for data transmission within chips can significantly reduce power consumption and increase speed. Silicon photonics is an emerging field that integrates photonic circuits with conventional silicon technology.

- Quantum Computing

Quantum computing represents a radical departure from classical computing paradigms. By leveraging the principles of quantum mechanics, quantum computers can carry out certain computations exponentially faster than the best known classical methods:

Qubits and Superposition: Unlike classical bits, which can be either 0 or 1, qubits can exist in a combination of both states simultaneously (superposition), enabling massive parallelism.

Entanglement and Quantum Gates: Quantum entanglement and gates allow qubits to interact in ways that facilitate complex computations beyond the reach of classical systems.

While still in its infancy, quantum computing has the potential to revolutionize fields like cryptography, materials science, and drug discovery.
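Superposition and entanglement can be illustrated by simulating two qubits on an ordinary computer. The sketch below is a toy state-vector simulation in plain Python, not a real quantum SDK, and the function names are hypothetical. It applies a Hadamard gate to put the first qubit into superposition, then a CNOT gate to entangle it with the second.

```python
import math

SQRT_HALF = 1 / math.sqrt(2)


def hadamard_on_first(state):
    """Apply a Hadamard gate to the first qubit of a 2-qubit state.

    The state is a list of four amplitudes ordered |00>, |01>, |10>, |11>.
    """
    a00, a01, a10, a11 = state
    return [
        SQRT_HALF * (a00 + a10),
        SQRT_HALF * (a01 + a11),
        SQRT_HALF * (a00 - a10),
        SQRT_HALF * (a01 - a11),
    ]


def cnot_first_controls_second(state):
    """Flip the second qubit when the first is 1, which swaps |10> and |11>."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def probabilities(state):
    """Measurement probabilities are the squared magnitudes of the amplitudes."""
    return [abs(amplitude) ** 2 for amplitude in state]


start = [1.0, 0.0, 0.0, 0.0]                   # both qubits in |0>
superposed = hadamard_on_first(start)          # first qubit is now half |0>, half |1>
bell = cnot_first_controls_second(superposed)  # entangled pair

print("after Hadamard:", probabilities(superposed))
print("after CNOT:    ", probabilities(bell))
```

After the CNOT, only the outcomes 00 and 11 have non-zero probability (roughly one half each), so measuring one qubit fixes the other. Note that this classical simulation stores 2^n amplitudes for n qubits, which is one reason large quantum systems are hard to simulate.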

- Neuromorphic Computing

Neuromorphic computing seeks to mimic the architecture and functioning of the human brain to achieve unprecedented levels of efficiency and performance:

Spiking Neural Networks: Unlike traditional neural networks that process information in a continuous flow, spiking neural networks communicate via discrete spikes, similar to biological neurons. This approach can lead to more efficient computation and lower power consumption.

Brain-Inspired Chips: Companies like Intel and IBM are developing neuromorphic chips (e.g., Intel’s Loihi) that emulate neural structures and processes. These chips are designed for tasks such as pattern recognition, sensory processing, and real-time learning.
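The spiking behavior described above can be sketched with a leaky integrate-and-fire neuron, one of the simplest common spiking models. This is an illustrative toy, not the model used by Loihi or any other specific chip, and the threshold, leak, and input values are hypothetical.

```python
def simulate_lif(input_currents, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate a leaky integrate-and-fire neuron over discrete time steps.

    Returns a list with 1 where the neuron spikes and 0 elsewhere.
    """
    potential = reset
    spikes = []
    for current in input_currents:
        # The membrane potential decays ("leaks") and then integrates the input.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)   # emit a discrete spike
            potential = reset  # and reset the membrane potential
        else:
            spikes.append(0)
    return spikes


print(simulate_lif([0.5] * 6))  # prints [0, 0, 1, 0, 0, 1]
print(simulate_lif([0.0] * 6))  # prints [0, 0, 0, 0, 0, 0]
```

The neuron only produces output when its accumulated input crosses the threshold. With no input there are no spikes and, in event-driven hardware, little work to do, which is where the potential power savings come from.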

- Advanced Packaging Technologies

Innovations in packaging technologies are also contributing to the breaking of Moore’s Law:

3D Integration: Stacking multiple layers of transistors vertically (3D stacking) can significantly increase transistor density and performance without shrinking individual transistors. Because the vertical connections between layers are shorter than wires routed across a flat chip, this approach reduces signal latency and improves power efficiency.

System-in-Package (SiP): Integrating multiple components (e.g., processors, memory, and sensors) into a single package can enhance performance and reduce the size and complexity of electronic systems.

Conclusion

While Moore’s Law may be reaching its practical limits, the relentless drive for increased computing power continues unabated. Through architectural innovations, new materials, quantum and neuromorphic computing, and advanced packaging technologies, the semiconductor industry is finding ways to break free from the constraints of traditional scaling. These advancements are not only ensuring the continued growth of computing capabilities but are also opening up new horizons for what is possible in technology. As we venture into this new era, the future of computing looks more promising and exciting than ever.